Context

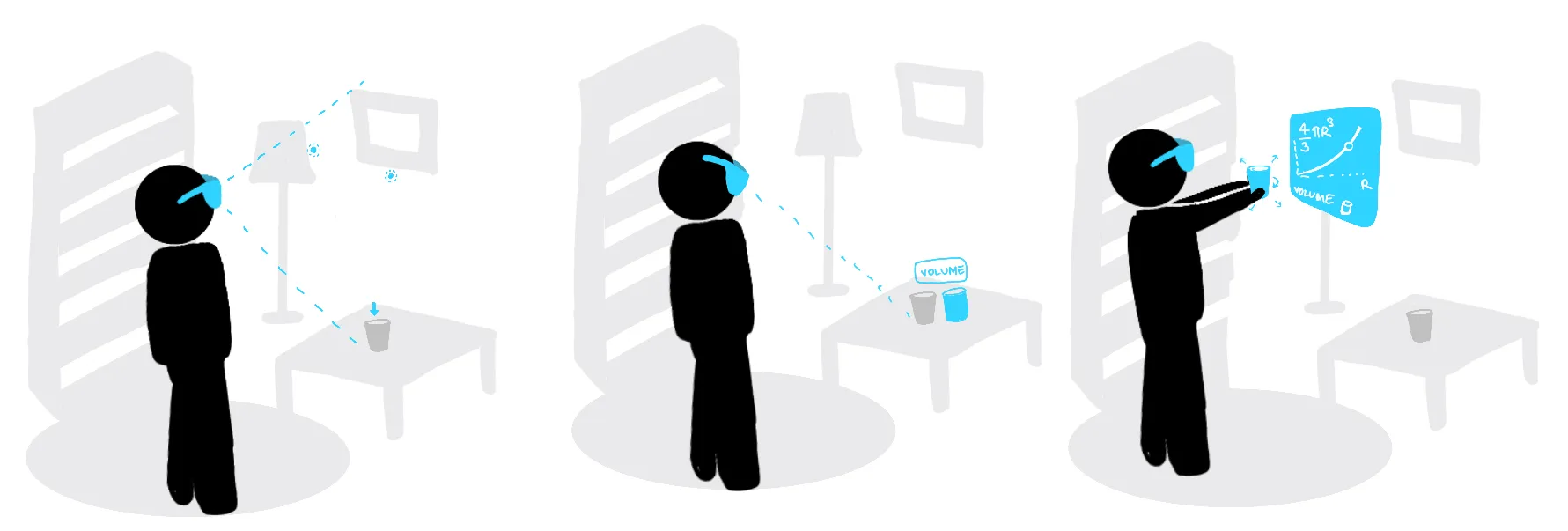

For my Master's thesis, I'm building a platform to support curiosity with XR. Learners can explore anything from math concepts, such as the volume of a cylinder when looking at a cup, to biology concepts, such as what plant cells look like when near a plant.

M.Des. Thesis

Supervised by Dr. Alexis Morris, Dr. Ian Clarke

MR Headset support

Meta Quest Pro & HoloLens 2

Tools

Unity, OpenXR, Oculus SDK, Socket.io, PyTorch

Timeline

2022 - 2023 (Launching soon on Meta Store)

How can XR support curiosity?

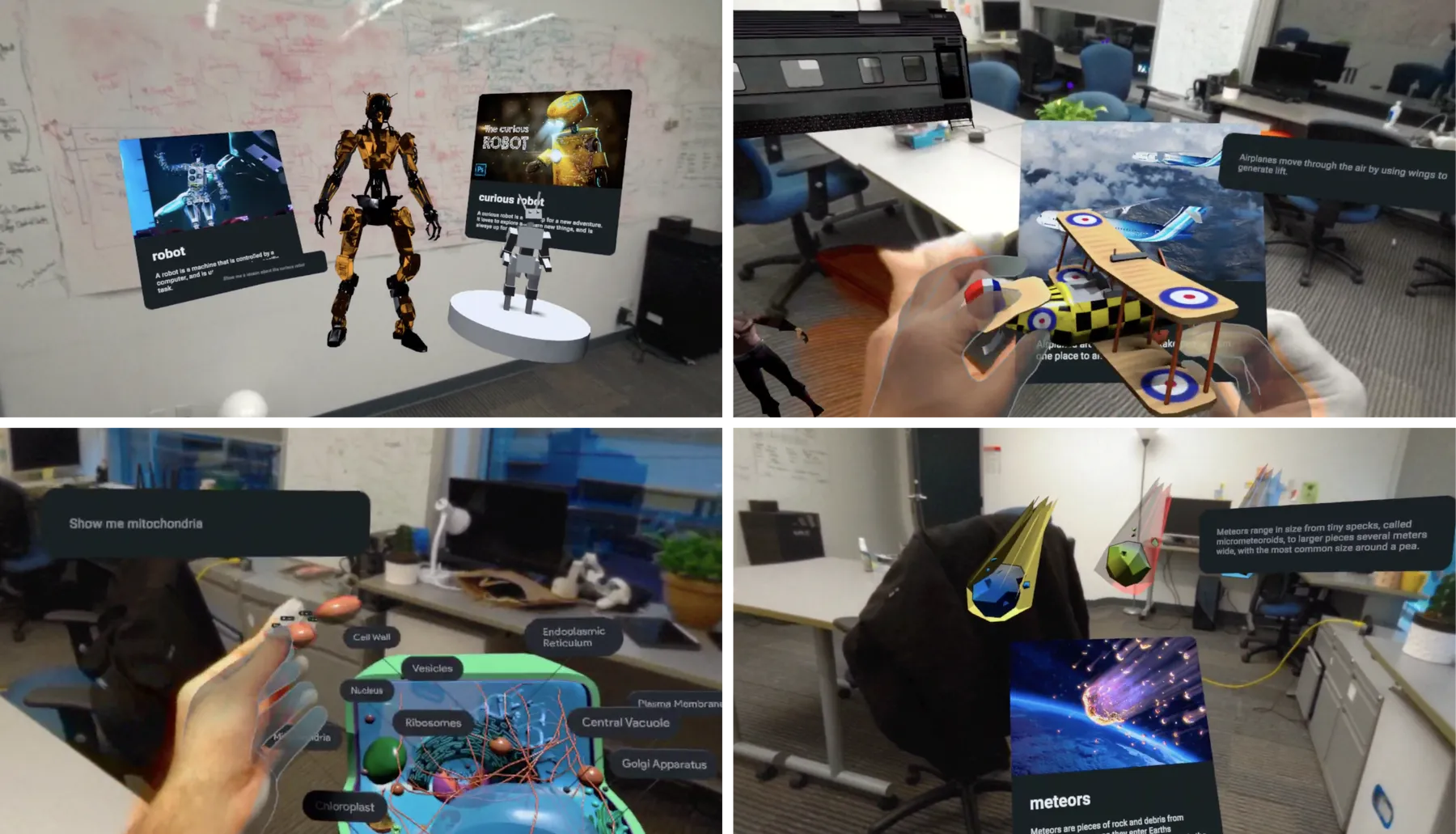

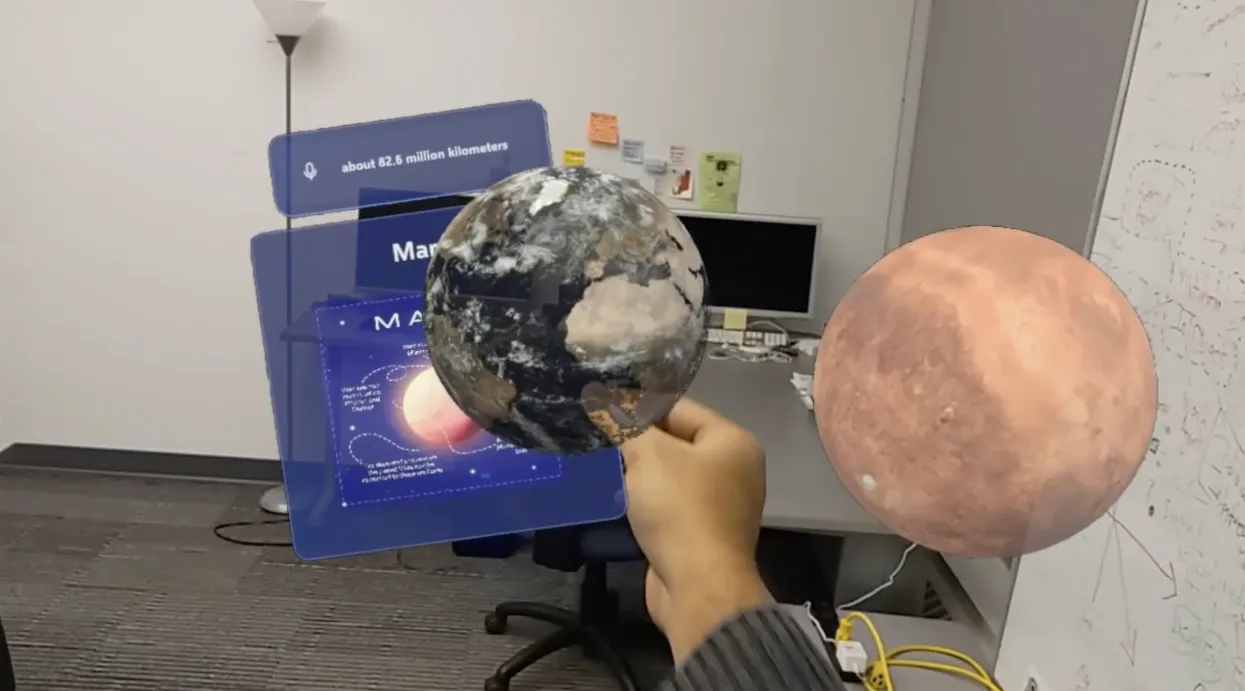

I am building an app to support XR learning experiences. I present an approach to map real-world context for multi-modal learning using ChatGPT, the Sketchfab API and other ML agents to support curiosity and improve knowledge recall. The prototypes allow users to learn languages, science, history, general knowledge and mathematics concepts through the objects and environment around them.

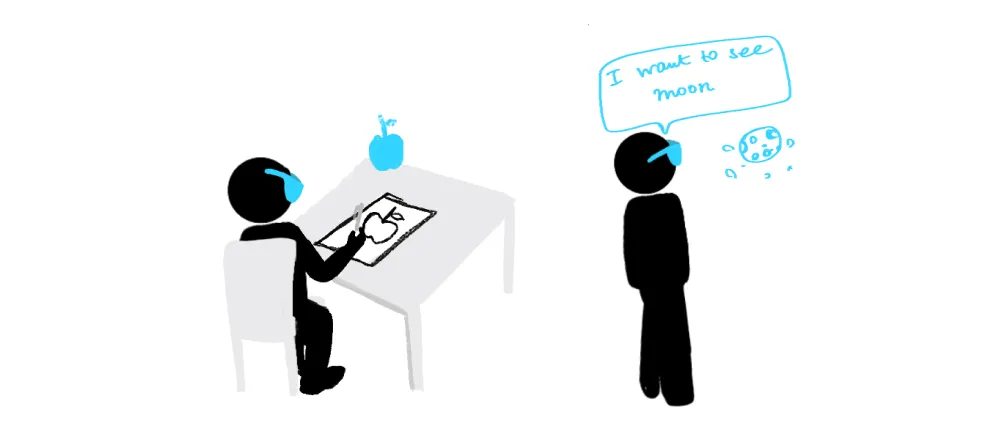

Help learners visualize things

Turning sketches of objects such as a chair or a car into 3D models is interesting, and the idea can be extended to objects that don’t exist, like purple apples or magical mushroom worlds with abstract gradients as the background. 2D sketching could serve as the interaction technique for this kind of expression in 3D space, giving learners a natural way to imagine and create 3D spaces and objects. This technique could support curiosity by not limiting the learner’s imagination to paper.

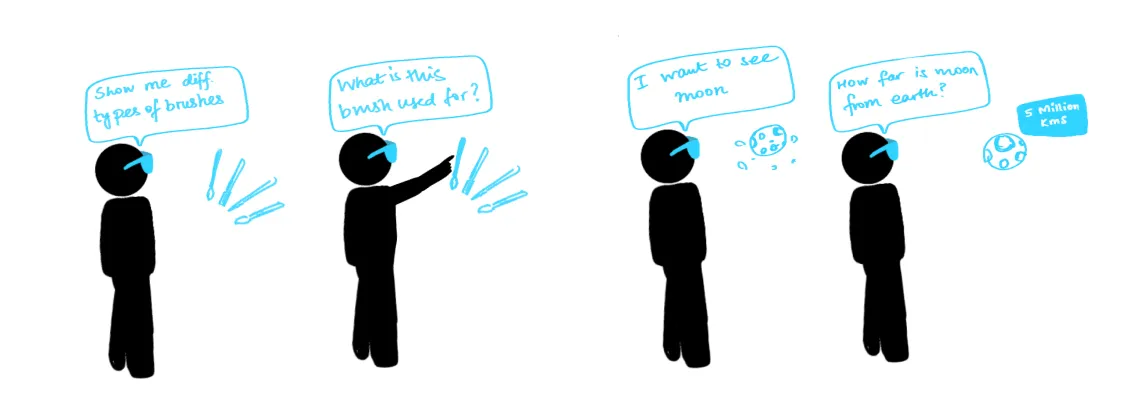

Storyboarding

This prototype aims to understand the benefits and scope of a Mixed Reality visualization support system. I drew some use case sketches to map out how the tool recognizes user intent, expressed through drawings or speech, and augments it in the MR space.

User sketch or speech is used to show 3D models from a database

User sketch is used to show 3D models rendered in real time

Outcomes

The demo is built on Microsoft HoloLens 2, with real-time object detection from the sketch using a custom-trained model. The model is trained on the Google Quick, Draw! dataset and works with around 350 common objects (chairs, cars, apples, etc.). Once a sketch is recognized as, say, an “apple”, the app searches for matching models in real time, filters for a suitable one that HoloLens 2 supports, downloads it, and renders it in front of the user. The user can then use their hands to scale, rotate or move these objects in the space.
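The recognize, filter and pick steps described above could look roughly like the Python sketch below. It is an illustration under stated assumptions, not the thesis implementation: the `ModelCandidate` fields, the `SUPPORTED_FORMATS` set, the `MAX_FACES` budget, the confidence threshold and the "pick the lightest model" rule are all hypothetical, and no real search API is called.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelCandidate:
    """One search result for a 3D model (hypothetical fields)."""
    name: str
    file_format: str
    face_count: int
    downloadable: bool


# Assumed headset constraints, chosen only for illustration.
SUPPORTED_FORMATS = {"glb", "gltf"}
MAX_FACES = 100_000


def top_label(scores: Sequence[float], labels: Sequence[str],
              min_confidence: float = 0.5) -> Optional[str]:
    """Return the best-scoring label, or None if the classifier is unsure."""
    if not scores or len(scores) != len(labels):
        raise ValueError("scores and labels must be non-empty and equal in length")
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best] if scores[best] >= min_confidence else None


def pick_model(label: str,
               candidates: Sequence[ModelCandidate]) -> Optional[ModelCandidate]:
    """Keep candidates the device can load, then prefer the lightest one."""
    suitable = [
        c for c in candidates
        if c.downloadable
        and c.file_format.lower() in SUPPORTED_FORMATS
        and c.face_count <= MAX_FACES
        and label.lower() in c.name.lower()
    ]
    return min(suitable, key=lambda c: c.face_count, default=None)
```

Returning `None` at either step lets the app fall back to asking the learner to redraw or rephrase instead of rendering a wrong or oversized model.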

Saying "Can I see Mars?" shows a 3D model of Mars

Sketching an apple renders a 3D model of an apple

Help them learn anything they want to learn

Storyboarding

After brainstorming and sketching ideas, I arrived at the following use case scenarios: the user could ask questions about a model, parts of a model, or one of the many models loaded. I used Wit.ai to recognize user intent and the ChatGPT API to answer as many questions as learners had while exploring these models.
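One way to picture the hand-off between intent recognition and question answering is the small router below. It is a sketch under assumptions: the intent names (`show_model`, `ask_question`), the `Intent` fields, the confidence threshold and the prompt wording are hypothetical, and it only builds a chat-style message list (system and user roles) rather than calling Wit.ai or the ChatGPT API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Intent:
    """A recognized intent (hypothetical shape, not Wit.ai's response format)."""
    name: str
    confidence: float
    subject: Optional[str] = None


def build_messages(question: str, focused_model: str,
                   focused_part: Optional[str] = None) -> list:
    """Wrap the learner's question with context about what they are looking at."""
    context = f"The learner is looking at a 3D model of {focused_model}"
    if focused_part:
        context += f", focusing on the {focused_part}"
    return [
        {"role": "system", "content": context + ". Answer briefly and clearly."},
        {"role": "user", "content": question},
    ]


def route(intent: Intent, utterance: str, focused_model: Optional[str],
          min_confidence: float = 0.6) -> tuple:
    """Decide what the app does next: load a model, ask the LLM, or clarify."""
    if intent.confidence < min_confidence:
        return ("clarify", None)
    if intent.name == "show_model" and intent.subject:
        return ("load_model", intent.subject)
    if intent.name == "ask_question" and focused_model:
        return ("ask_llm", build_messages(utterance, focused_model))
    return ("clarify", None)
```

Passing the focused model into the prompt is what lets a question like "What is this part?" be answered in context, whichever of the loaded models the learner is exploring.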